Fine-tuning a GPT — Prefix-tuning, by Chris Kuo/Dr. Dataman

By A Mystery Man Writer

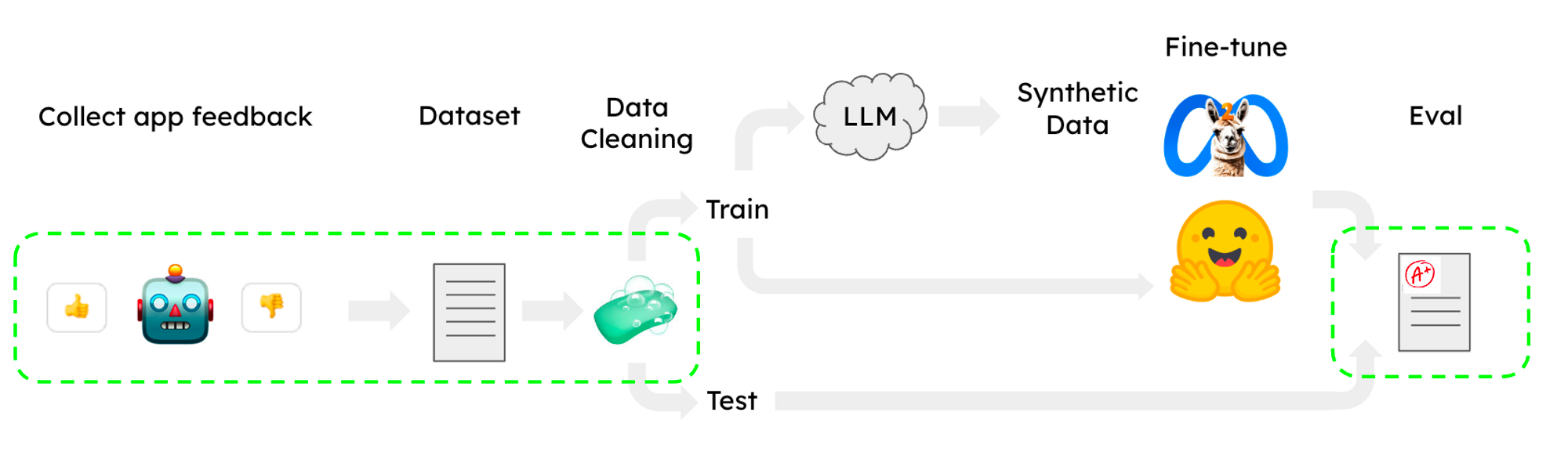

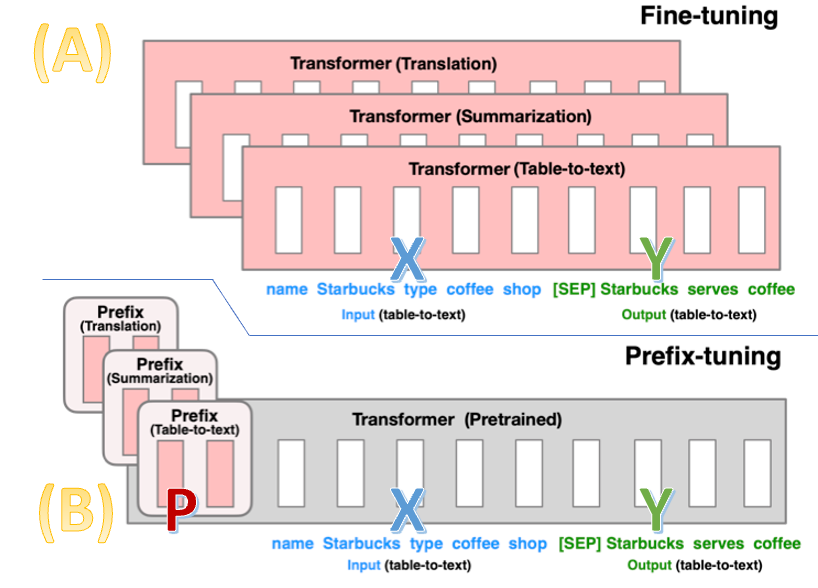

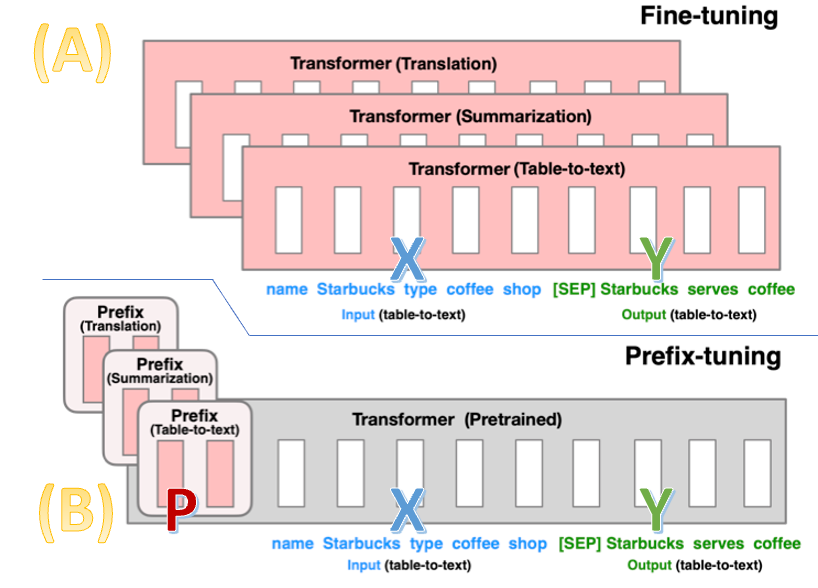

In this post and the next, I will walk you through the fine-tuning process for a Large Language Model (LLM), or Generative Pre-trained Transformer (GPT). There are two prominent fine-tuning…

What does the setting 'num_virtual_tokens: 20' specify? @chris
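As a rough illustration of what `num_virtual_tokens` controls, assume it refers to the field of the same name in Hugging Face PEFT's `PrefixTuningConfig`. In prefix-tuning, that many trainable key and value vectors are prepended to the attention of every layer while the base model's weights stay frozen. The pure-Python sketch below uses hypothetical helper names (`prefix_param_count`, `attend`, `attend_with_prefix`) and toy sizes. It counts the trainable prefix parameters, assuming no reparameterization MLP, and runs one attention step over the prefixed keys and values.

```python
import math
import random


def prefix_param_count(num_virtual_tokens, num_layers, hidden_size):
    # Hypothetical helper: one key vector and one value vector per virtual
    # token, per layer (assumes no reparameterization MLP on the prefix).
    return num_virtual_tokens * num_layers * 2 * hidden_size


def attend(query, keys, values):
    # Scaled dot-product attention for a single query vector.
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


def attend_with_prefix(query, keys, values, prefix_keys, prefix_values):
    # Prefix-tuning: the trainable prefix keys/values are prepended to the
    # frozen model's own keys/values before attention is computed.
    return attend(query, prefix_keys + keys, prefix_values + values)


def rand_vec(dim):
    return [random.gauss(0, 1) for _ in range(dim)]


random.seed(0)
hidden = 4
num_virtual_tokens = 20
prefix_k = [rand_vec(hidden) for _ in range(num_virtual_tokens)]  # trainable
prefix_v = [rand_vec(hidden) for _ in range(num_virtual_tokens)]  # trainable
seq_k = [rand_vec(hidden) for _ in range(5)]  # from the frozen model
seq_v = [rand_vec(hidden) for _ in range(5)]  # from the frozen model
out = attend_with_prefix(rand_vec(hidden), seq_k, seq_v, prefix_k, prefix_v)
print(len(out))  # → 4

# Trainable prefix size for a GPT-2-small-sized model (12 layers, hidden size 768).
print(prefix_param_count(20, 12, 768))  # → 368640
```

Under these assumptions, `num_virtual_tokens: 20` does not lengthen the user's input text. It adds 20 extra key/value positions per layer that every real token can attend to, and those positions are the only parameters that are trained.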
