GitHub - bytedance/effective_transformer: Running BERT without Padding

Running BERT without Padding.
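To make "without padding" concrete, here is a minimal sketch of the general idea: drop padded positions before the per-token computation and scatter the results back afterwards. It is a generic illustration in plain Python, not code from the bytedance/effective_transformer repository. The function names `remove_padding` and `restore_padding`, the mask convention (1 for a real token, 0 for padding), and the example token ids are all assumptions made for this example.

```python
from typing import List, Tuple


def remove_padding(
    batch: List[List[int]], mask: List[List[int]]
) -> Tuple[List[int], List[Tuple[int, int]]]:
    """Pack the non-padded tokens of a batch into one flat list.

    Returns the packed tokens and the (row, column) position each one
    came from, so the original layout can be rebuilt later.
    (Hypothetical helper, for illustration only.)
    """
    packed: List[int] = []
    positions: List[Tuple[int, int]] = []
    for row, (tokens, row_mask) in enumerate(zip(batch, mask)):
        for col, (token, keep) in enumerate(zip(tokens, row_mask)):
            if keep:  # mask value 1 marks a real token, 0 marks padding
                packed.append(token)
                positions.append((row, col))
    return packed, positions


def restore_padding(
    packed: List[int],
    positions: List[Tuple[int, int]],
    batch_size: int,
    seq_len: int,
    pad_value: int = 0,
) -> List[List[int]]:
    """Scatter packed values back into a padded [batch_size, seq_len] grid."""
    restored = [[pad_value] * seq_len for _ in range(batch_size)]
    for value, (row, col) in zip(packed, positions):
        restored[row][col] = value
    return restored


# Two sequences padded to length 4; the ids are arbitrary example values.
batch = [[101, 7592, 102, 0], [101, 102, 0, 0]]
mask = [[1, 1, 1, 0], [1, 1, 0, 0]]

packed, positions = remove_padding(batch, mask)
restored = restore_padding(packed, positions, batch_size=2, seq_len=4)
```

In this example only 5 of the 8 positions hold real tokens, so any per-token work done on `packed` touches 5 elements instead of 8. Operations that mix tokens within a sequence, such as attention, still need the per-sequence layout, which is why the positions are kept for restoring it.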

BERT Fine-Tuning Sentence Classification v2.ipynb - Colaboratory

What are transformer models, and how to run them on UbiOps - UbiOps - AI model serving, orchestration & training

default output of BertModel.from_pretrained('bert-base-uncased') · Issue #2750 · huggingface/transformers · GitHub

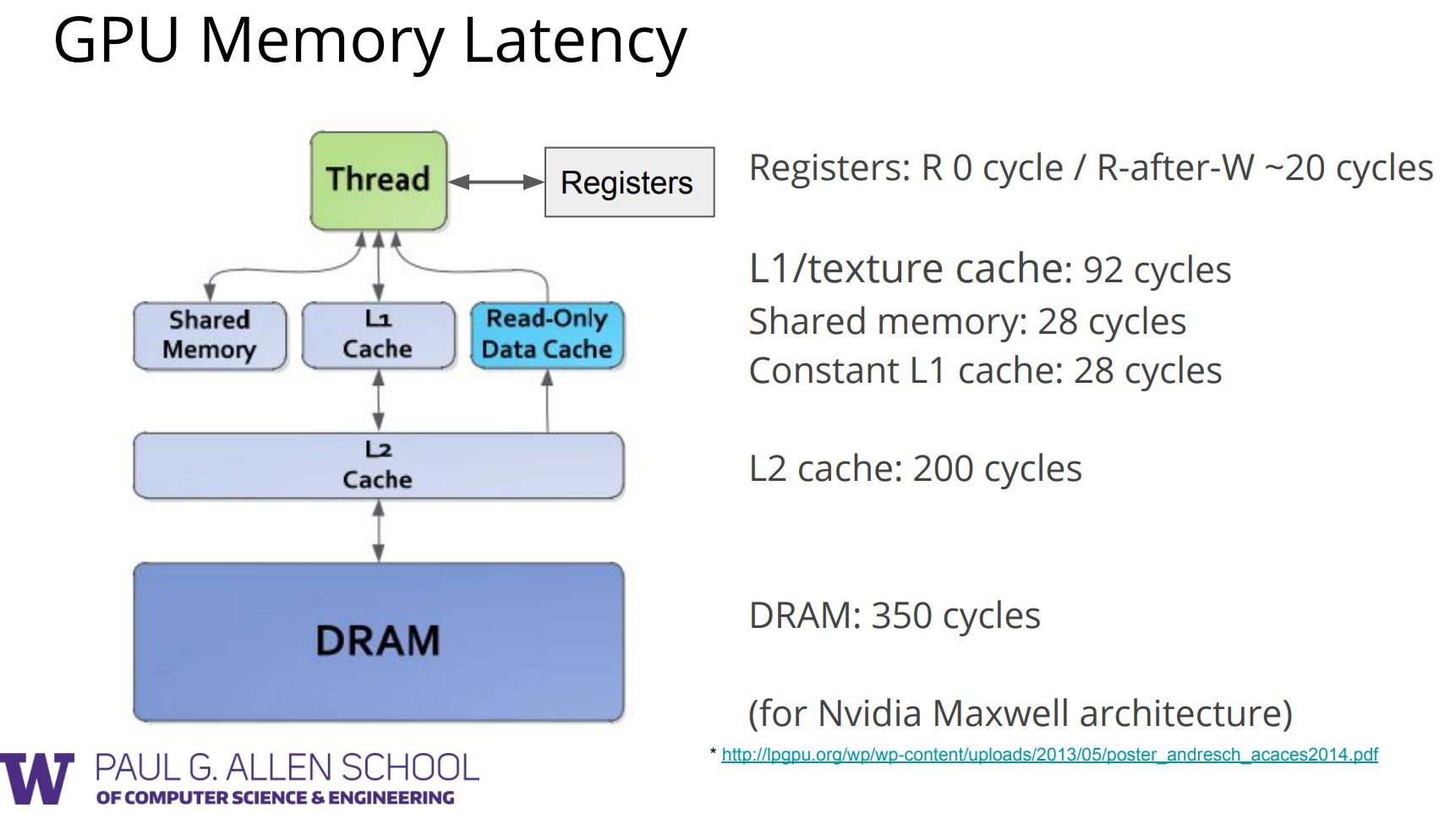

CS-Notes/Notes/Output/nvidia.md at master · huangrt01/CS-Notes · GitHub

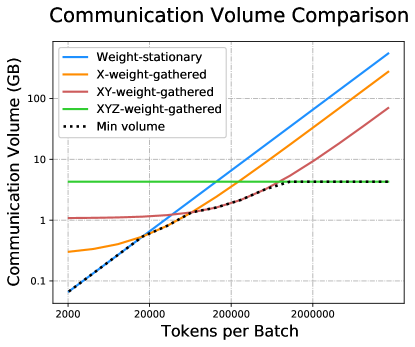

Full-Stack Optimizing Transformer Inference on ARM Many-Core CPU

[2211.05102] 1 Introduction

transformer · GitHub Topics · GitHub

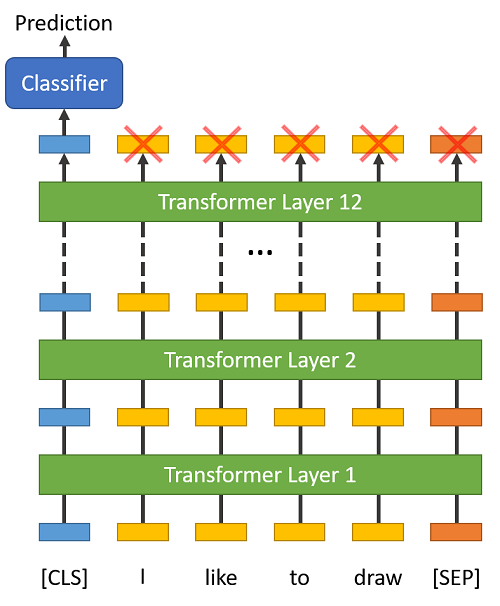

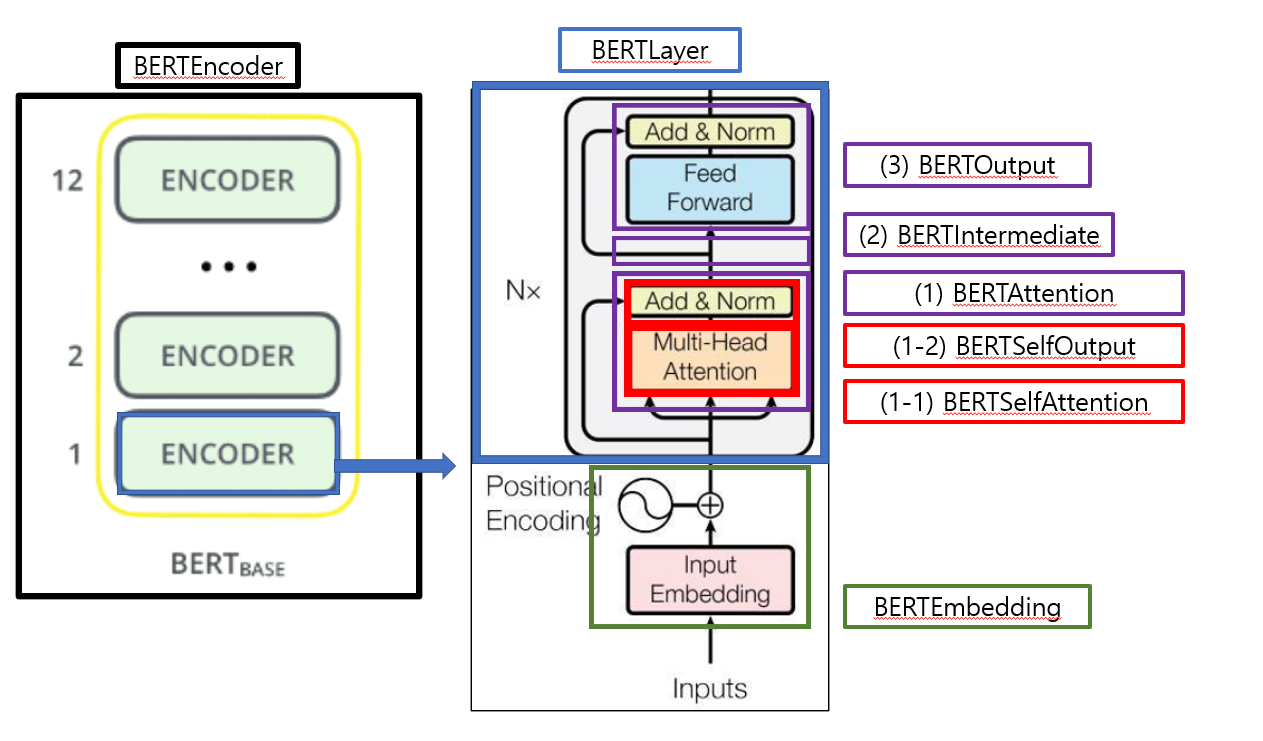

code review 1) BERT - AAA (All About AI)

YellowOldOdd (Yellow) · GitHub

inference · GitHub Topics · GitHub

Embedding index getting out of range while running camemebert model · Issue #4153 · huggingface/transformers · GitHub

Why only use pre-trained BERT Tokenizer but not the entire pre-trained BERT model(including the pre-trained encoder)? · Issue #115 · CompVis/latent-diffusion · GitHub

- Convolution operation without padding (a), and with same

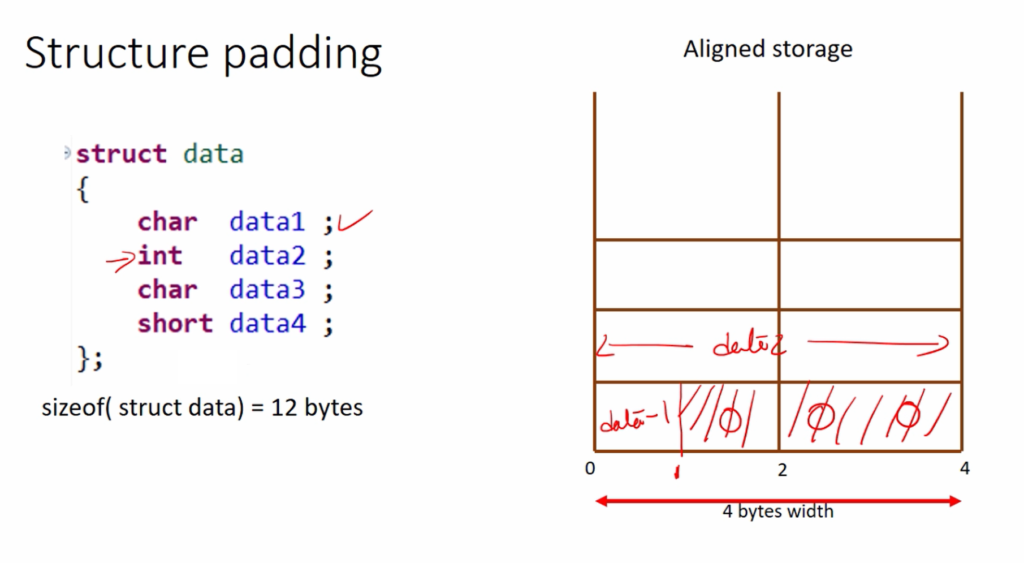

- Structure Size Calculation: With and Without Padding

- Zero Padding in Convolutional Neural Networks explained - deeplizard

- Tkinter Tutorial - Add Padding to Your Windows - AskPython

- Table Without Border in HTML 2 Types of Table Without Border in HTML

- Wx-101 Waste Toner Container for Use in Bizhub C220 C280 C360 Mur

- Invisible Adhesive Strapless Bra 2 Pack Sticky Push Up Silicone Bra For Women Backless Dress With Breast Lift Tape [free Shipping]

- Wall Art Print vinsmoke sanji quotes, Gifts & Merchandise

- Buy Long One Piece Western Dress At Wholesale at Rs.499/Piece

- Tattoo Series Padded Bikini Top - Roses and Dice - JiuJitsu Lifestyle Brand