Explainable AI, LIME & SHAP for Model Interpretability, Unlocking AI's Decision-Making

By A Mystery Man Writer

Dive into Explainable AI (XAI) and learn how to build trust in AI systems with LIME and SHAP for model interpretability. Understand the importance of transparency and fairness in AI-driven decisions.
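
To make the idea of feature attribution concrete, here is a minimal sketch of the exact Shapley value computation that SHAP is built around. It does not use the `shap` library; it brute-forces every feature subset, which is only practical for a handful of features. The names `shapley_values` and `toy_model`, the feature names, and the all-zero baseline are assumptions made up for illustration, not anything taken from the article.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one input x, measured against a baseline input."""
    n = len(x)
    features = range(n)

    def value(subset):
        # Features in `subset` keep their real value; the rest use the baseline.
        mixed = [x[i] if i in subset else baseline[i] for i in features]
        return predict(mixed)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Shapley weight for a coalition of this size that excludes feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(features):
    # Hypothetical scoring model with one interaction term (illustration only).
    income, debt, age = features
    return 2.0 * income - 1.5 * debt + 0.5 * income * age


x = [3.0, 2.0, 1.0]          # the instance to explain
baseline = [0.0, 0.0, 0.0]   # assumed reference point
phi = shapley_values(toy_model, x, baseline)

for name, contribution in zip(["income", "debt", "age"], phi):
    print(f"{name}: {contribution:+.2f}")
```

The attributions sum to the difference between the prediction for `x` and the prediction for the baseline, which is the additivity property that makes Shapley-style explanations easy to read. The interaction term is split evenly between `income` and `age`. Practical SHAP explainers approximate this computation rather than enumerating every subset.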

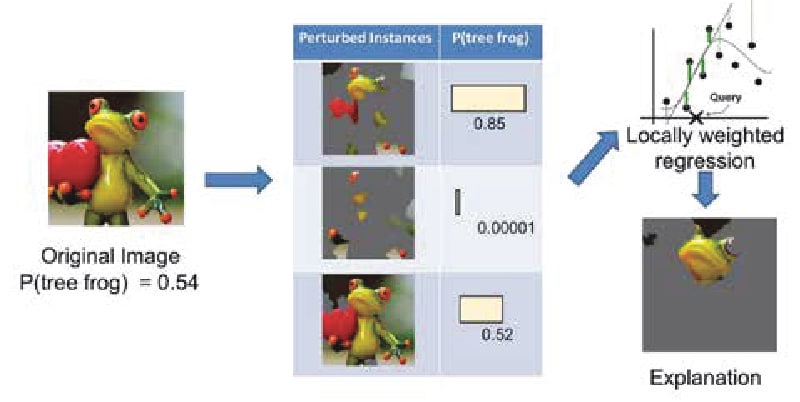

Explainable AI: Adapting LIME for video model interpretability, by Joachim Vanneste

Entropy, Free Full-Text

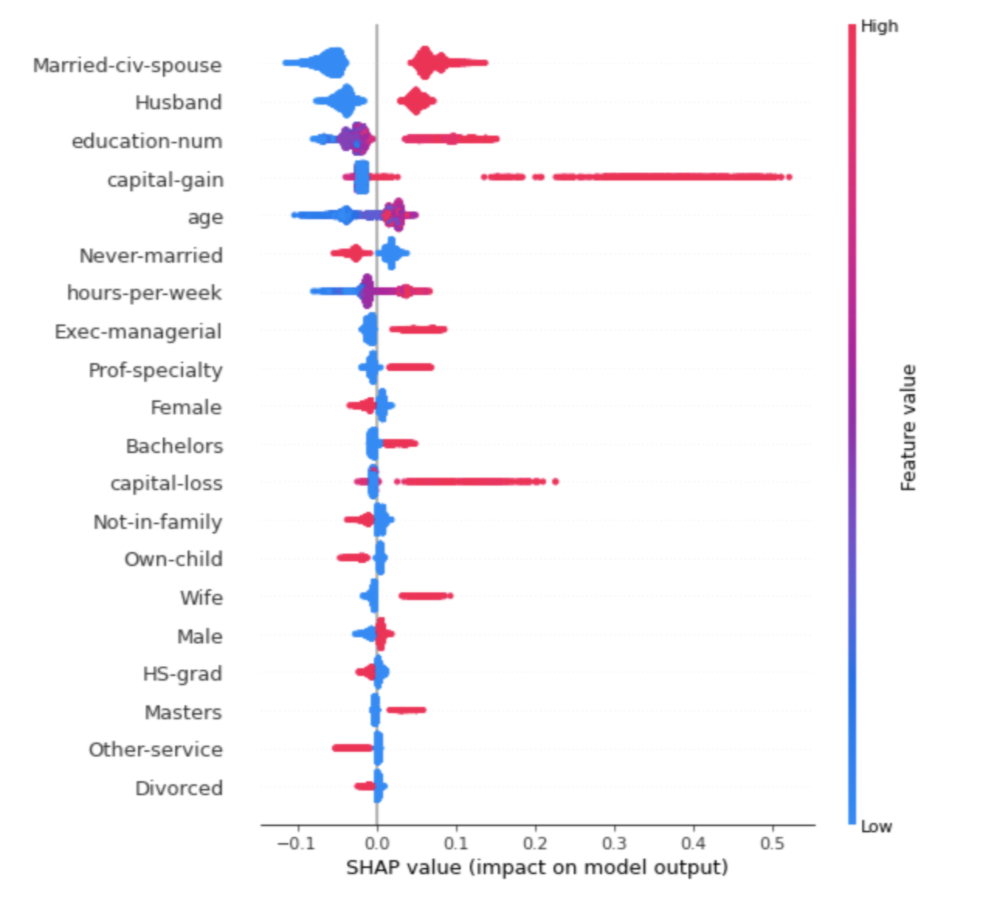

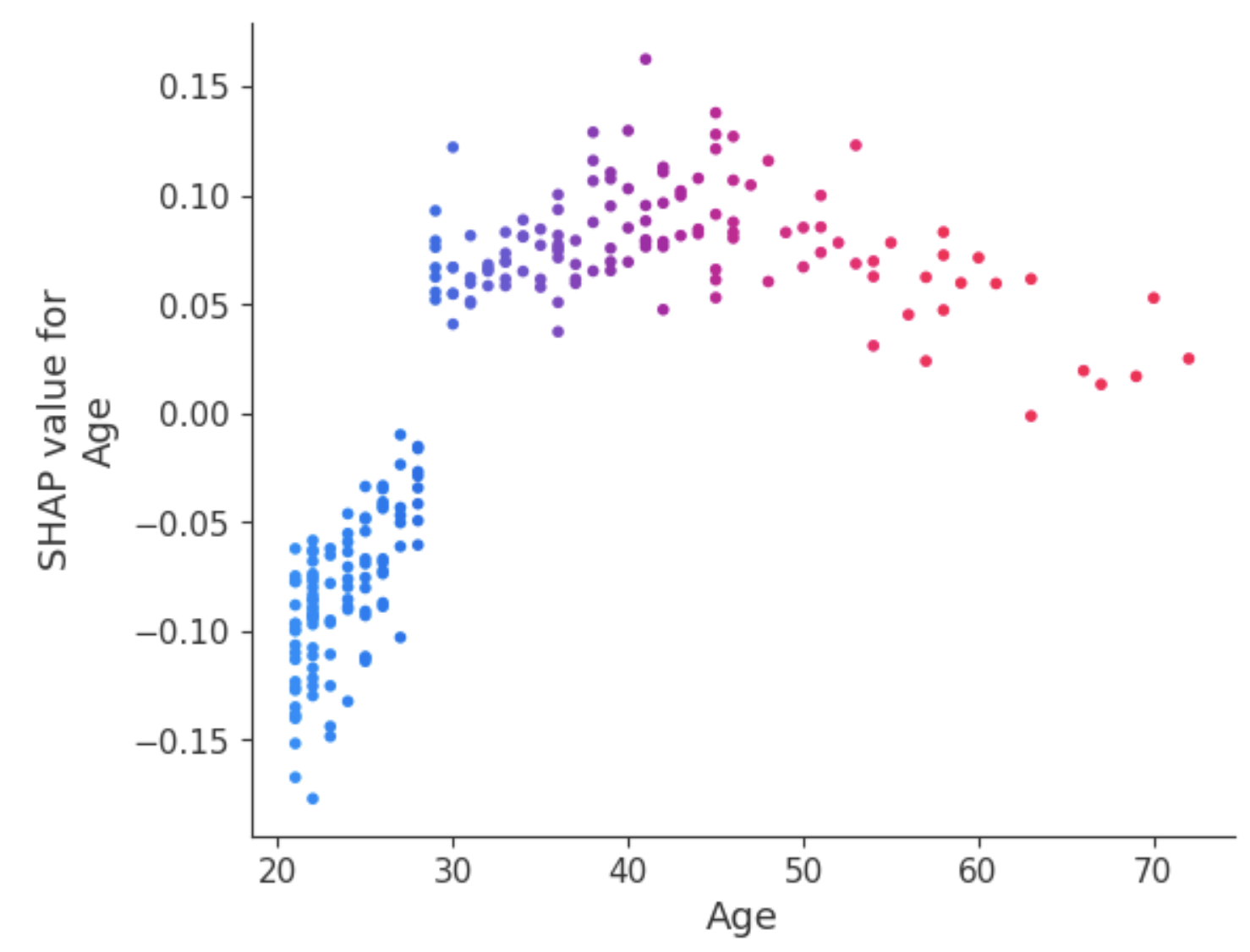

How to Interpret Machine Learning Models with LIME and SHAP

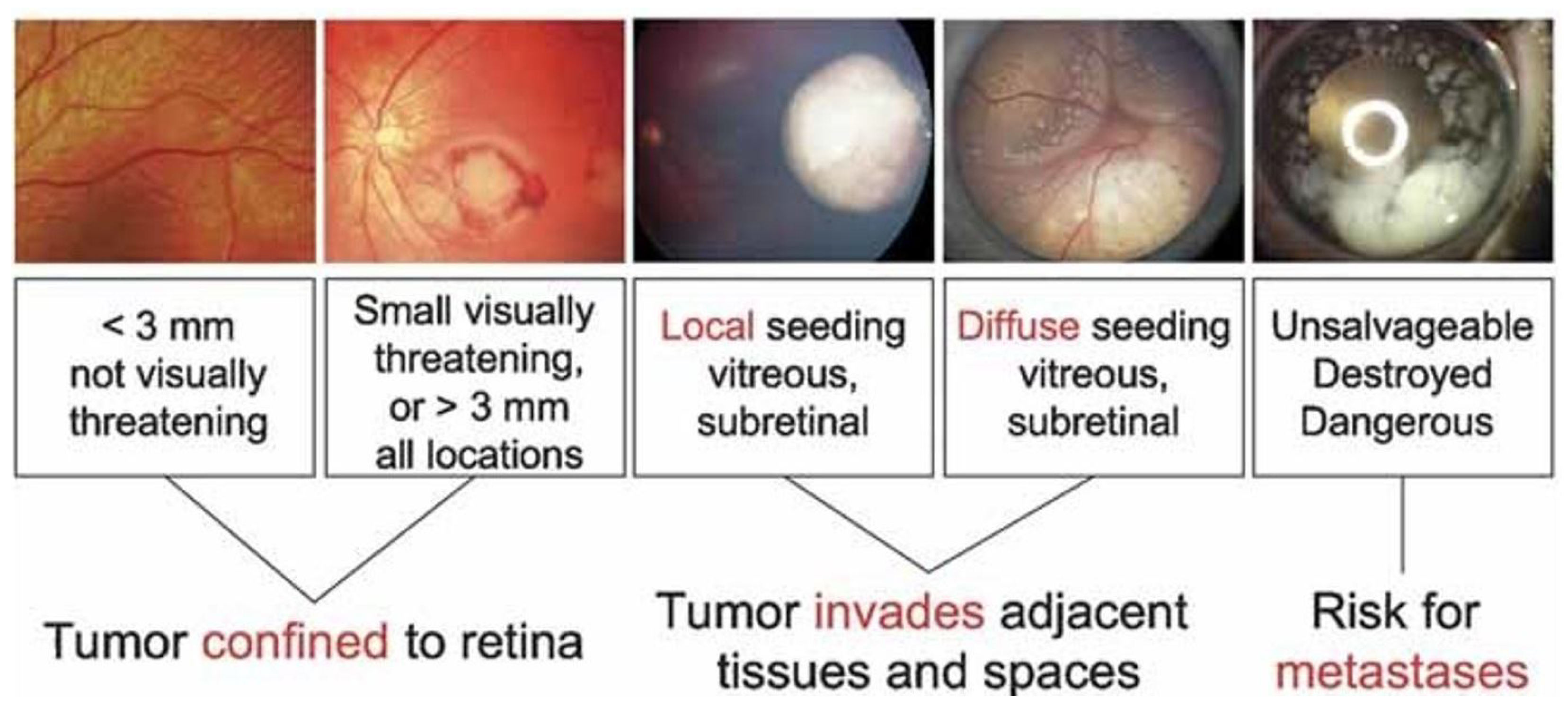

Diagnostics, Free Full-Text

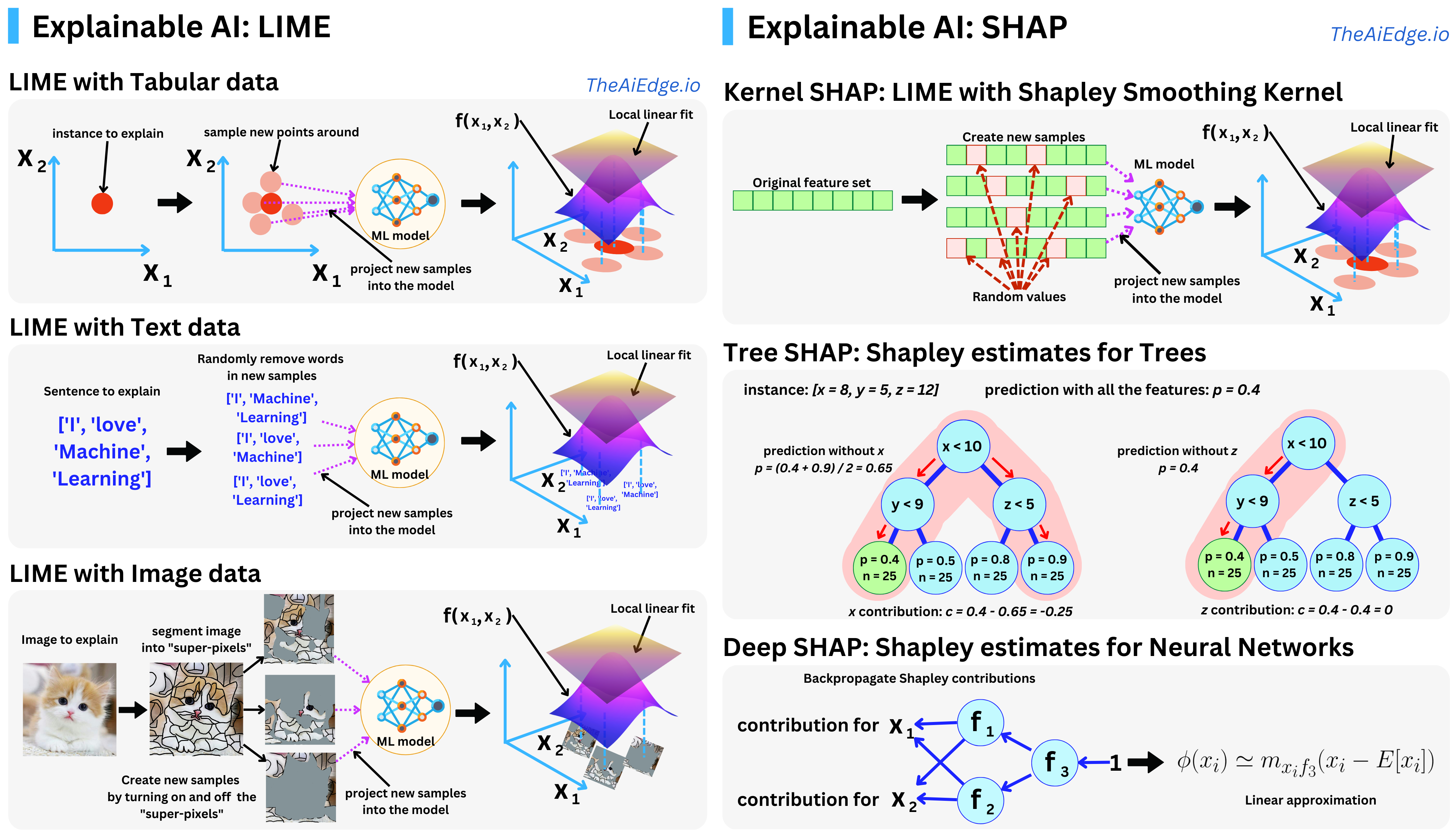

The AiEdge+: Explainable AI - LIME and SHAP

Comprehending AI model decisions with SHAP Explainers and feature influence plots

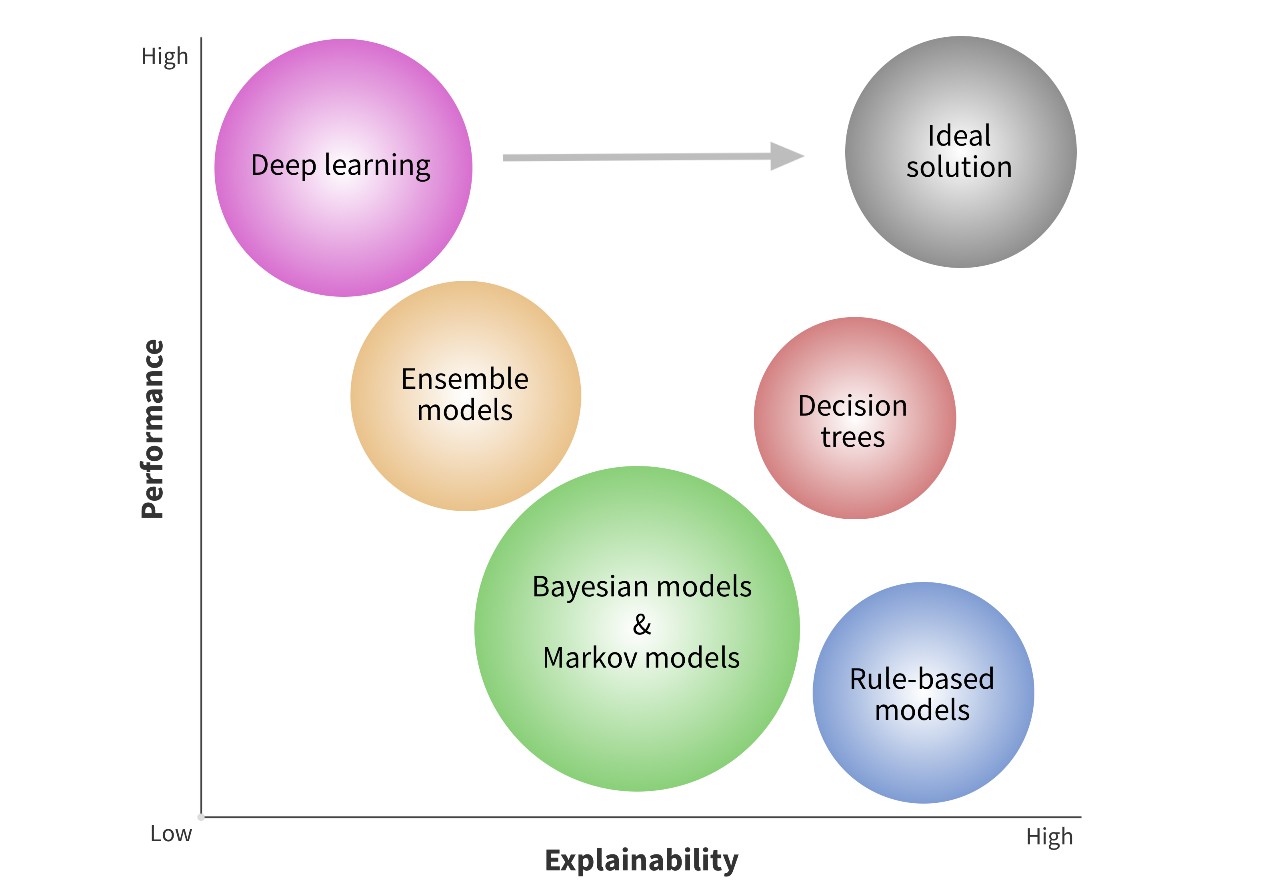

How to Make AI Models Transparent and Explainable

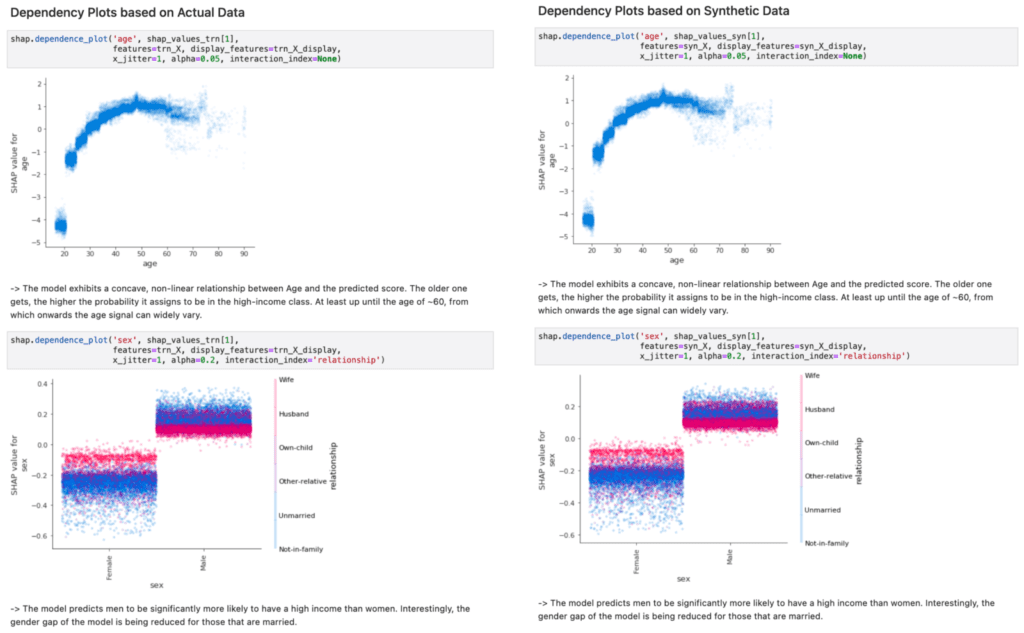

The Future of Explainable AI rests upon Synthetic Data - MOSTLY AI

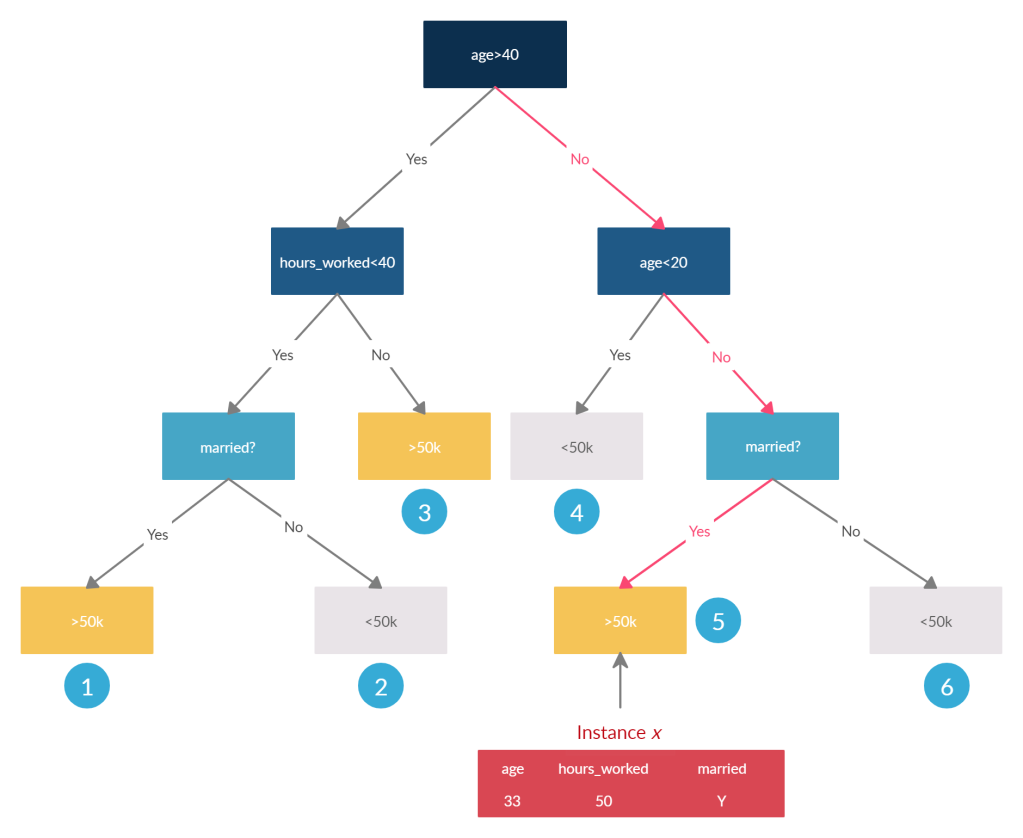

Interpretability part 3: opening the black box with LIME and SHAP - KDnuggets

Infosys Knowledge Institute Advanced Trends In AI: The Infosys Way

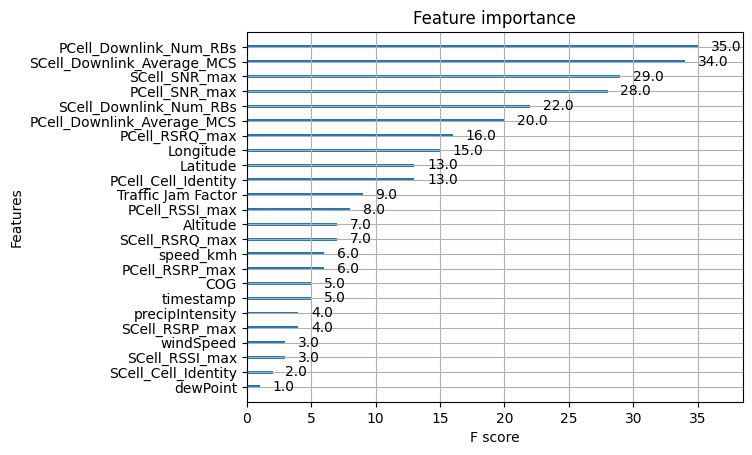

Unlocking the Black Box: Harnessing Explainable AI in Telecommunications, by Buse Bilgin, turkcell

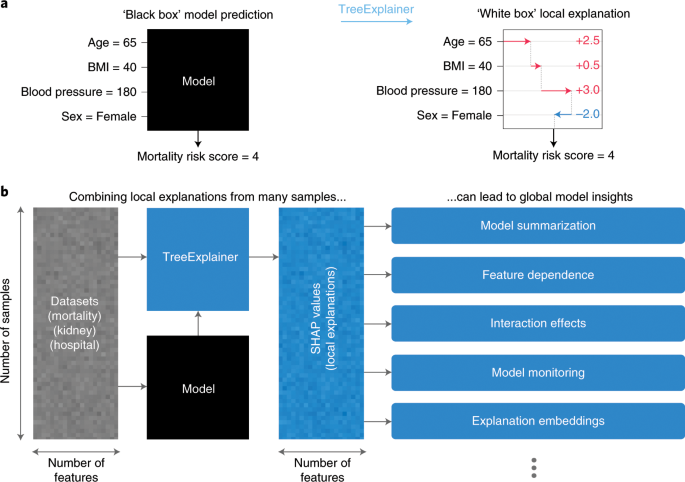

From local explanations to global understanding with explainable AI for trees

Explainable AI (XAI): Unlocking Transparency and Trust in Artificial Intelligence, by Lazy Sith

Conceptual diagram showing the different post-hoc explainability
