MPT-30B: Raising the bar for open-source foundation models


By A Mystery Man Writer

Cloudflare R2 and MosaicML: Train LLMs on Any Compute with Zero

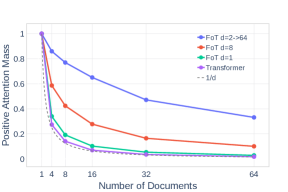

[2307.03170] Focused Transformer: Contrastive Training for Context Scaling

Why Enterprises Should Treat AI Models Like Critical IP (Part 1)

MosaicML Releases Open-Source MPT-30B LLMs, Trained on H100s to

Jeremy Dohmann on LinkedIn: Introducing MPT-7B: A New Standard for Open-Source, Commercially Usable…

MPT-30B: Raising the bar for open-source foundation models : r

Announcing MPT-7B-8K: 8K Context Length for Document Understanding

Computational Power and AI - AI Now Institute

Announcing MPT-7B-8K: 8K Context Length for Document Understanding - Reading & Information Sharing - PyTorch Korea User Group

Is Mosaic's MPT-30B Ready For Our Commercial Use?

GitHub - OthersideAI/llm-foundry-2: LLM training code for MosaicML foundation models

- MONDIAL Table Fan, 110V, 30cm, 6 Blades, Super Power - VSP-30

- Mondial Super Power VSP-30-B Table Fan - 30cm 3

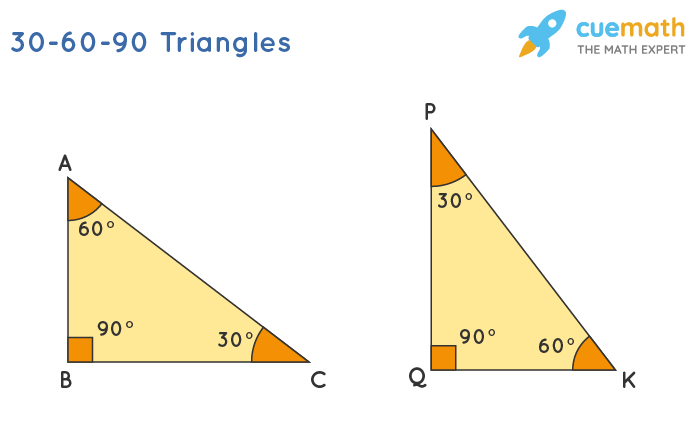

- 30-60-90 Triangle - Rules, Formula, Theorem, Sides, Examples

- Mondial 6-Blade 30cm Table Fan VSP-30B 60W

- Mondial Super Power 30cm Table Fan VSP-30-B 60W 127V - Black