Friday, Sept 20 2024

What's in the RedPajama-Data-1T LLM training set

By A Mystery Man Writer

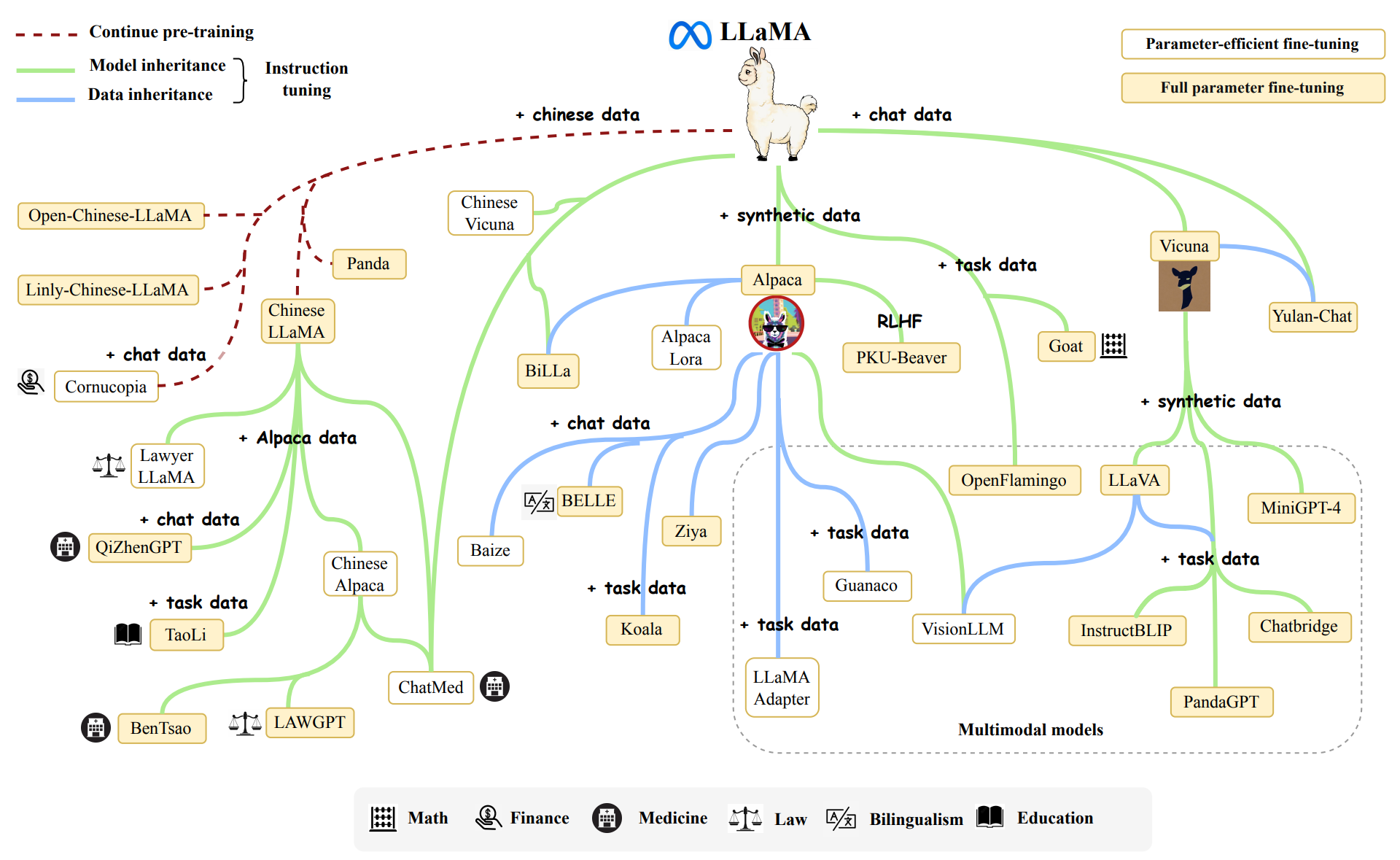

RedPajama is “a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens”. It’s a collaboration between Together, Ontocord.ai, ETH DS3Lab, Stanford CRFM, …

togethercomputer/RedPajama-Data-V2 · Datasets at Hugging Face

LLM360, A true Open Source LLM

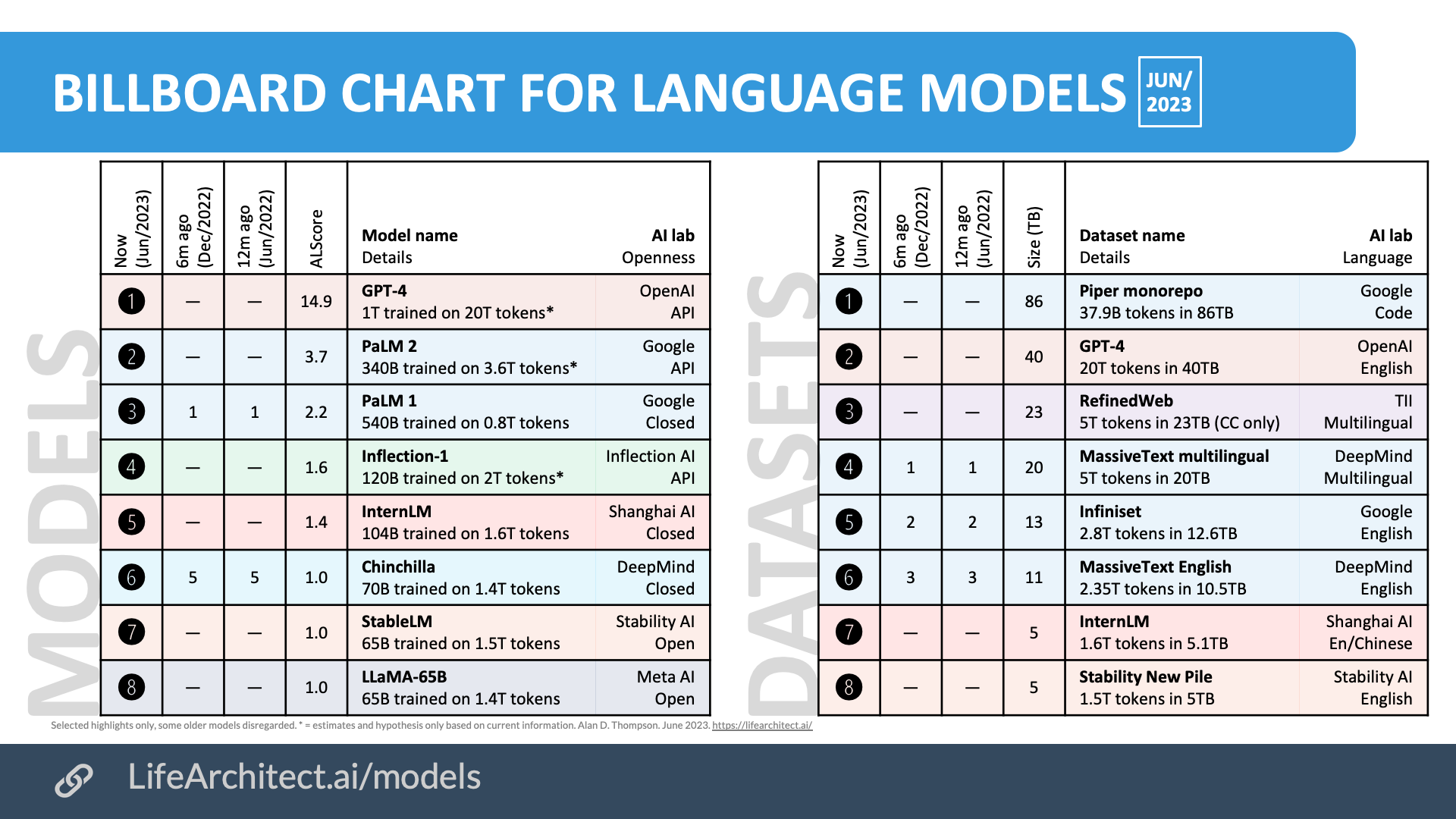

Inside language models (from GPT to Olympus) – Dr Alan D. Thompson

65-Billion-Parameter Large Model Pretraining Accelerated by 38

GitHub - togethercomputer/RedPajama-Data: The RedPajama-Data

Inside language models (from GPT to Olympus) – Dr Alan D. Thompson

Web LLM runs the vicuna-7b Large Language Model entirely in your

Bringing LLM Fine-Tuning and RLHF to Everyone

©2016-2024, travellemur.com, Inc. or its affiliates